PRISMLAYERS: Open Data for High-Quality Multi-Layer Transparent Image Generative Models

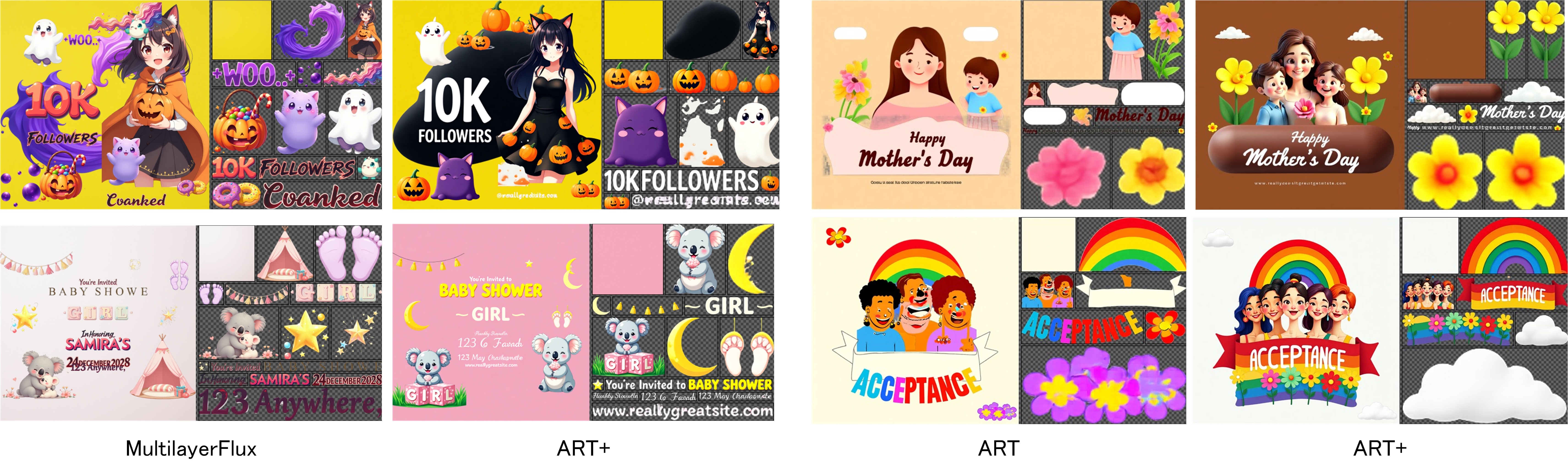

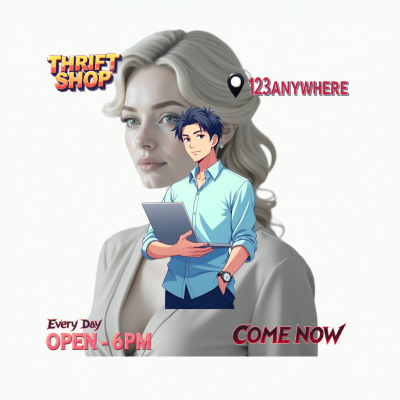

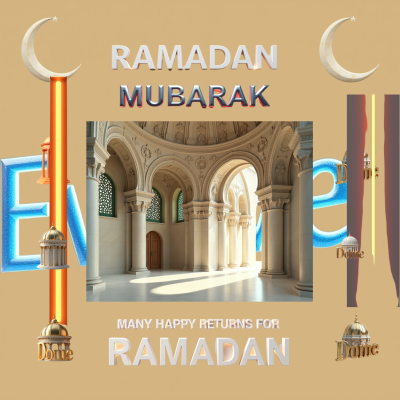

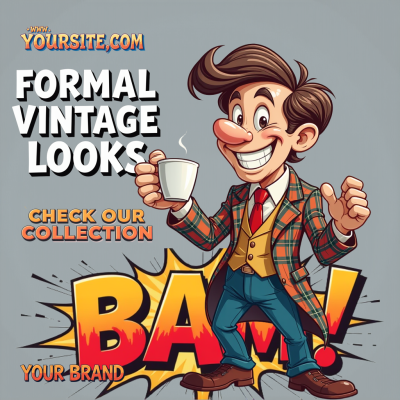

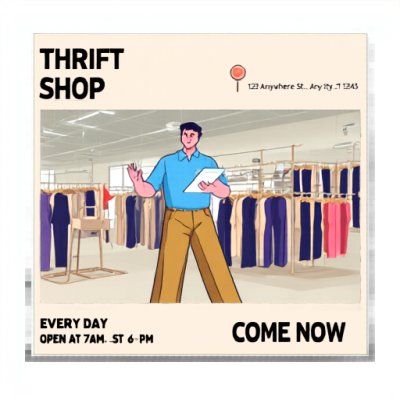

Multi-layer transparent images with different styles generated by our data engine

Abstract

Generating high-quality, multi-layer transparent images from text prompts can unlock a new level of creative control, allowing users to edit each layer as effortlessly as editing text outputs from LLMs. However, the development of multi-layer generative models lags behind that of conventional text-to-image models due to the absence of a large, high-quality corpus of multi-layer transparent data. In this paper, we address this fundamental challenge by:

(i) releasing the first open, ultra-high-fidelity PrismLayers (PrismLayersPro) dataset of 200K (20K) multi-layer transparent images with accurate alpha mattes,

(ii) introducing a training-free synthesis pipeline that generates such data on demand using off-the-shelf diffusion models,

(iii) delivering a strong, open-source multi-layer generation model, ART+, which matches the aesthetics of modern text-to-image generation models.

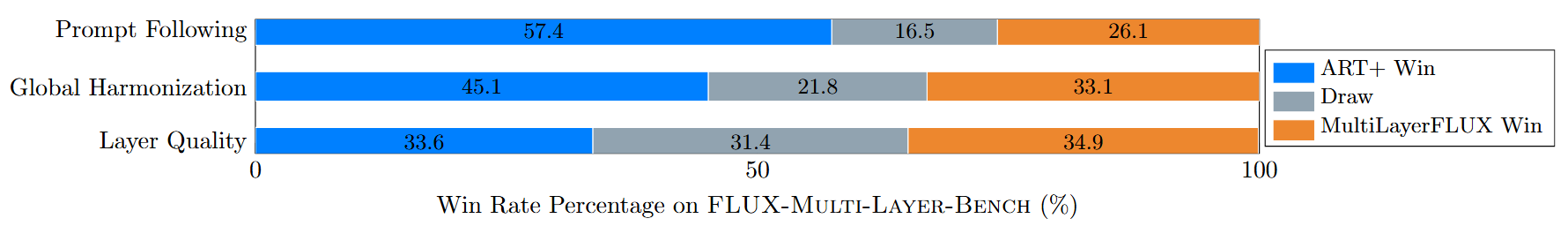

The key technical contributions include: LayerFLUX, which excels at generating high-quality single transparent layers with accurate alpha mattes, and MultiLayerFLUX, which composes multiple LayerFLUX outputs into complete images, guided by human-annotated semantic layout. To ensure higher quality, we apply a rigorous filtering stage to remove artifacts and semantic mismatches, followed by human selection. Fine-tuning the state-of-the-art ART model on our synthetic PrismLayersPro yields ART+, which outperforms the original ART in 60% of head-to-head user study comparisons and even matches the visual quality of images generated by the FLUX.1-[dev] model. We anticipate that our work will establish a solid dataset foundation for the multi-layer transparent image generation task, enabling research and applications that require precise, editable, and visually compelling layered imagery.

Method Overview

Dataset Curation Pipeline of PrismLayers and PrismLayersPro

Key dataset statistics on PrismLayers and PrismLayersPro

LayerFLUX and MultiLayerFLUX Framework

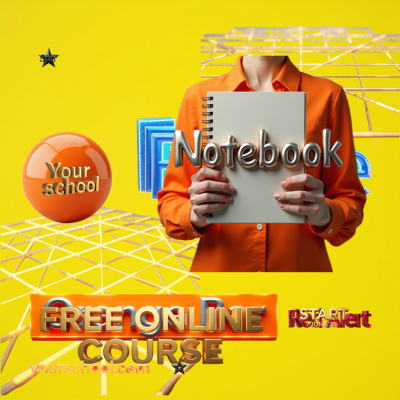

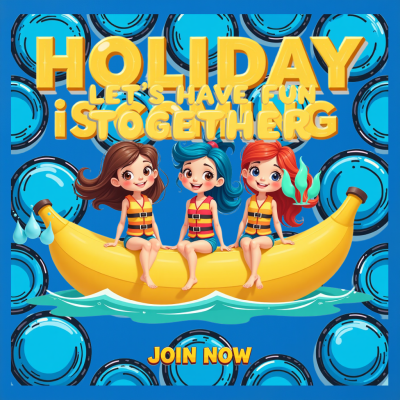

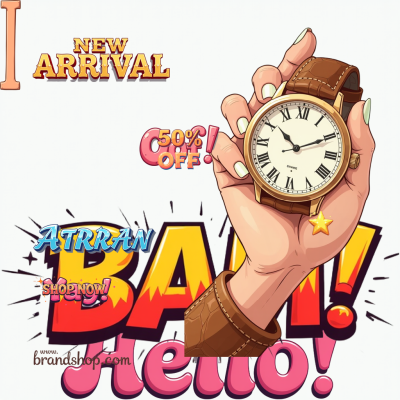

Qualitative Results

Qualitative Results of LayerFLUX

Qualitative Results of ART+

Qualitative comparison results between FLUX.1-[dev] (1st row), MultiLayerFLUX (2nd row), ART (3rd row), and ART+ (4th row)

Quantitative Results

Transparent Image Quality Assessment

We develop a dedicated Transparent Image Preference Scoring (TIPS) model fine-tuned on a large win–lose dataset to reliably evaluate and rank the aesthetic quality of generated transparent layers and composites.
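This page does not state the training objective used for TIPS. A common choice for win-lose preference data is a Bradley-Terry style pairwise loss, which pushes the score of the preferred image above the score of the rejected one. The sketch below illustrates that idea under this assumption only; the function names `pairwise_preference_loss` and `pairwise_accuracy` are hypothetical and are not taken from the authors' code.

```python
import math


def pairwise_preference_loss(score_win: float, score_lose: float) -> float:
    """Return -log(sigmoid(score_win - score_lose)), computed stably.

    Assumption: a Bradley-Terry style objective; the actual TIPS loss may differ.
    """
    margin = score_win - score_lose
    # -log(sigmoid(m)) equals softplus(-m) = log(1 + exp(-m)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    # For negative margins, rewrite to avoid overflow in exp(-margin).
    return -margin + math.log1p(math.exp(margin))


def pairwise_accuracy(score_pairs: list[tuple[float, float]]) -> float:
    """Fraction of (win, lose) score pairs where the winner scores higher."""
    correct = sum(1 for win, lose in score_pairs if win > lose)
    return correct / len(score_pairs)


# Hypothetical scores from a preference model for two win-lose pairs.
pairs = [(1.0, 0.0), (0.2, 0.5)]
mean_loss = sum(pairwise_preference_loss(w, l) for w, l in pairs) / len(pairs)
accuracy = pairwise_accuracy(pairs)
```

The loss is small when the preferred image already scores well above the rejected one and grows roughly linearly when the ranking is inverted, which is why this family of objectives is often used for preference scoring.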


MultiLayerFLUX composes multiple LayerFLUX-generated transparent layers according to a semantic layout (either extracted from a reference or produced by an LLM), ensuring precise spatial control and preserving each layer's visual fidelity. We then fine-tune ART on our synthesized data to improve its layer-wise aesthetic quality and overall visual appeal.
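To make the composition step concrete, here is a minimal sketch of stacking transparent layers onto a canvas with the standard Porter-Duff "over" operator. It is an illustration, not the authors' implementation: the `Layer` class, the `left`/`top` offsets standing in for a layout box, and the `compose` function are all hypothetical, and pixels are assumed to be straight (non-premultiplied) RGBA values in [0, 1].

```python
from dataclasses import dataclass

Pixel = tuple[float, float, float, float]  # straight RGBA, each channel in [0, 1]
Image = list[list[Pixel]]                  # rows of pixels


@dataclass
class Layer:
    pixels: Image  # the transparent layer's content
    left: int      # x offset of the layer on the canvas (hypothetical layout field)
    top: int       # y offset of the layer on the canvas (hypothetical layout field)


def over(src: Pixel, dst: Pixel) -> Pixel:
    """Porter-Duff 'over': place src on top of dst using straight alpha."""
    sr, sg, sb, sa = src
    dr, dg, db, da = dst
    out_a = sa + da * (1.0 - sa)
    if out_a == 0.0:
        return (0.0, 0.0, 0.0, 0.0)

    def blend(s: float, d: float) -> float:
        return (s * sa + d * da * (1.0 - sa)) / out_a

    return (blend(sr, dr), blend(sg, dg), blend(sb, db), out_a)


def compose(width: int, height: int, layers: list[Layer]) -> Image:
    """Composite layers back to front onto a fully transparent canvas."""
    canvas: Image = [[(0.0, 0.0, 0.0, 0.0)] * width for _ in range(height)]
    for layer in layers:
        for dy, row in enumerate(layer.pixels):
            for dx, pixel in enumerate(row):
                x, y = layer.left + dx, layer.top + dy
                # Skip pixels that fall outside the canvas.
                if 0 <= x < width and 0 <= y < height:
                    canvas[y][x] = over(pixel, canvas[y][x])
    return canvas


# An opaque blue 2x2 background with a half-transparent red pixel placed at (1, 1).
background = Layer([[(0.0, 0.0, 1.0, 1.0)] * 2 for _ in range(2)], left=0, top=0)
foreground = Layer([[(1.0, 0.0, 0.0, 0.5)]], left=1, top=1)
result = compose(2, 2, [background, foreground])
```

Because each layer keeps its own alpha matte and position, a single layer can be moved or replaced and the image recomposed without touching the others, which is the editability the layout-driven design is meant to preserve.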

Dataset Sample

This is a neon graffiti style image. The image presents a vibrant and playful background in a bright blue hue, reminiscent of a clear sky. This cheerful backdrop sets a whimsical tone, enhancing the overall festive atmosphere of the composition. The blue is uniform, providing a striking contrast to the other elements that populate the scene. At the center of the image, a large, cartoonish crescent moon is depicted, featuring a textured surface that suggests depth and detail, with dark craters and a glossy finish. Surrounding the moon are fluffy, stylized clouds in varying shades of blue, which add a sense of lightness and playfulness to the scene. Scattered throughout the clouds are several cute, ghostly figures, each with a friendly expression. These ghosts are predominantly white with subtle pink accents, giving them a charming and non-threatening appearance. Their playful poses suggest movement, as if they are floating joyfully in the air. Adding to the whimsical nature of the image, small, colorful stars in shades of yellow, orange, and red are sprinkled around the moon and clouds. These stars enhance the magical feel of the scene, creating a sense of wonder and excitement. Prominently displayed at the bottom of the image is the text, "WHEN GHOSTS ARE IN FLIGHT, IT'S FRIGHT NIGHT!" The font is bold and playful, rendered in a bright yellow color that stands out against the blue background. The letters have a dripping effect, reminiscent of melting wax, which adds a fun, spooky touch appropriate for a Halloween theme. The text is large and centrally aligned, ensuring it captures the viewer's attention immediately. The artistic style of the image is cartoonish and cheerful, leaning towards a digital illustration aesthetic. The use of soft edges and bright colors contributes to a lighthearted and festive mood, making it suitable for a family-friendly Halloween celebration. 
The overall theme of the image is playful and festive, evoking feelings of joy and excitement associated with Halloween. The mood is light and fun, rather than scary, making it ideal for children or family-oriented events. In terms of symbolism, the ghosts represent the playful side of Halloween, while the crescent moon and stars evoke a sense of magic and wonder. This image could be effectively used for various contexts, such as Halloween party invitations, decorations, or social media posts aimed at celebrating the holiday in a fun and engaging way.

BibTeX

@article{chen2025prismlayersopendatahighquality,
  title={PrismLayers: Open Data for High-Quality Multi-Layer Transparent Image Generative Models},
  author={Junwen Chen and Heyang Jiang and Yanbin Wang and Keming Wu and Ji Li and Chao Zhang and Keiji Yanai and Dong Chen and Yuhui Yuan},
  journal={arXiv preprint arXiv:2505.22523},
  year={2025},
}